162 class(MpiRunControlType) :: this

164 integer(I4B) :: i, j, id, irank

165 integer(I4B) :: nr_models, nr_exgs, nr_remotes, max_nr_remotes

166 type(STLVecInt) :: remote_models, remote_exgs

167 integer(I4B),

dimension(:, :),

pointer :: remote_models_per_process

168 integer(I4B),

dimension(:, :),

pointer :: remote_exgs_per_process

169 class(VirtualModelType),

pointer :: vm

170 class(VirtualExchangeType),

pointer :: ve

171 type(MpiWorldType),

pointer :: mpi_world

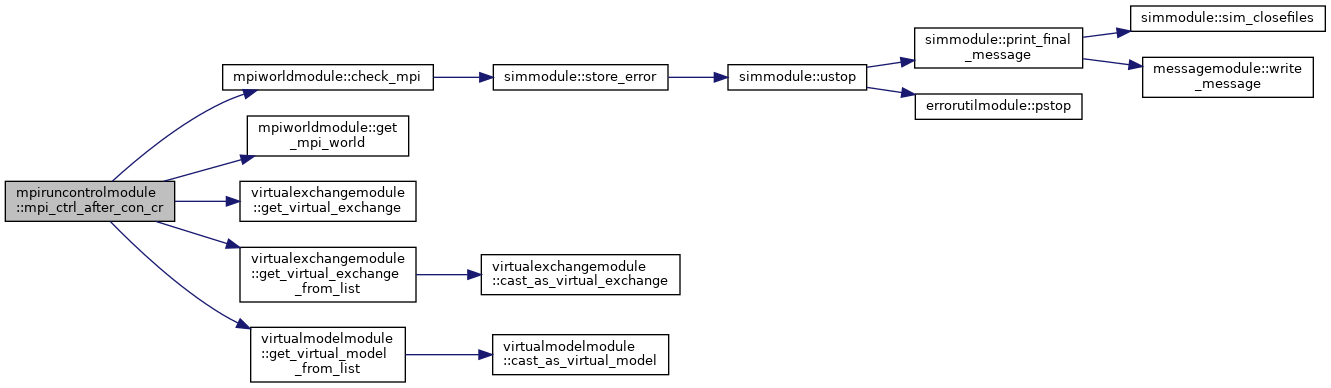

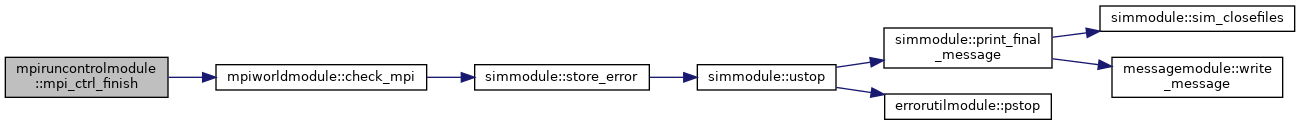

174 mpi_world => get_mpi_world()

177 call this%RunControlType%after_con_cr()

180 call remote_models%init()

184 if (vm%is_active .and. .not. vm%is_local)

then

186 call remote_models%push_back(vm%id)

189 call remote_exgs%init()

193 if (ve%is_active .and. .not. ve%is_local)

then

195 call remote_exgs%push_back(ve%id)

200 nr_remotes = remote_models%size

201 call mpi_allreduce(nr_remotes, max_nr_remotes, 1, mpi_integer, mpi_max, &

202 mpi_world%comm, ierr)

205 allocate (remote_models_per_process(max_nr_remotes, nr_procs))

206 remote_models_per_process = 0

209 do i = 1, remote_models%size

210 remote_models_per_process(i, proc_id + 1) = remote_models%at(i)

212 call mpi_allreduce(mpi_in_place, remote_models_per_process, &

213 max_nr_remotes * nr_procs, mpi_integer, mpi_max, &

214 mpi_world%comm, ierr)

219 do j = 1, max_nr_remotes

220 id = remote_models_per_process(j, i)

224 if (vm%is_local)

then

227 call vm%rcv_ranks%push_back_unique(irank)

234 nr_remotes = remote_exgs%size

235 call mpi_allreduce(nr_remotes, max_nr_remotes, 1, mpi_integer, mpi_max, &

236 mpi_world%comm, ierr)

239 allocate (remote_exgs_per_process(max_nr_remotes, nr_procs))

240 remote_exgs_per_process = 0

243 do i = 1, remote_exgs%size

244 remote_exgs_per_process(i, proc_id + 1) = remote_exgs%at(i)

246 call mpi_allreduce(mpi_in_place, remote_exgs_per_process, &

247 max_nr_remotes * nr_procs, mpi_integer, mpi_max, &

248 mpi_world%comm, ierr)

253 do j = 1, max_nr_remotes

254 id = remote_exgs_per_process(j, i)

258 if (ve%is_local)

then

261 call ve%rcv_ranks%push_back_unique(irank)

268 call remote_models%destroy()

269 call remote_exgs%destroy()

271 deallocate (remote_models_per_process)

272 deallocate (remote_exgs_per_process)

type(listtype), public virtual_model_list

type(listtype), public virtual_exchange_list

class(virtualexchangetype) function, pointer, public get_virtual_exchange_from_list(list, idx)

class(virtualexchangetype) function, pointer, public get_virtual_exchange(exg_id)

Returns a virtual exchange with the specified id.

class(virtualmodeltype) function, pointer, public get_virtual_model_from_list(model_list, idx)

The Virtual Exchange is based on two Virtual Models and is therefore not always strictly local or rem...